
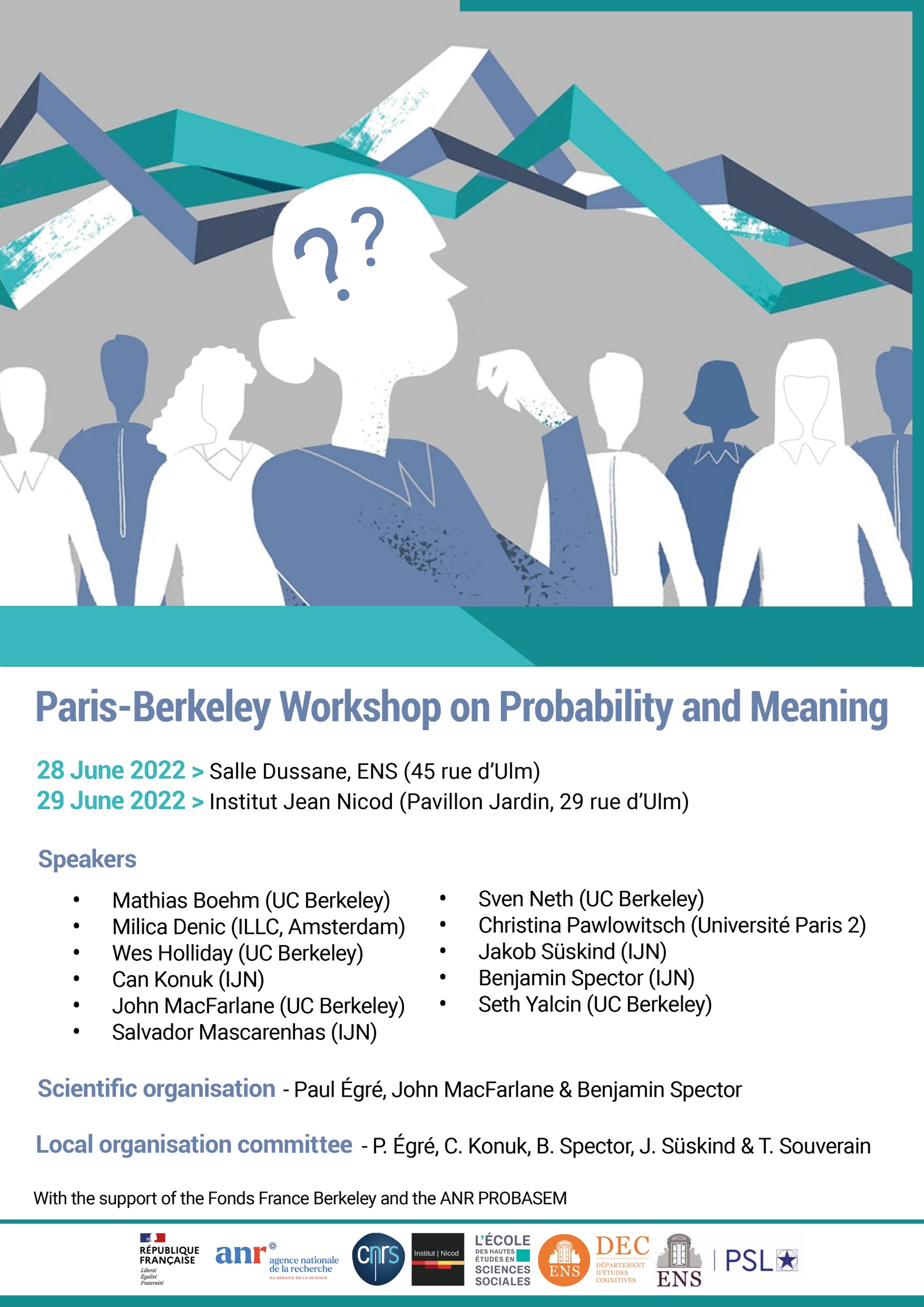

Paris-Berkeley Workshop on Probability and Meaning | June 28-29, 2022

The goal of this bilateral Paris-Berkeley workshop is to bring together philosophers, linguists, and game theorists interested in the probabilistic treatment of meaning. The talks will report on work in progress on implicatures, modals, uncertain inference, and confirmation theory.

Date: June 28-29, 2022

Location: ENS, Salle Dussane (45 rue d’Ulm) on June 28, and Institut Jean-Nicod (Pavillon Jardin, 29 rue d’Ulm) on June 29.

Organized by Paul Egré, John MacFarlane, and Benjamin Spector, with the support of the France Berkeley Fund and the ANR project PROBASEM.

Speakers

- Mathias Boehm (UC Berkeley)

- Milica Denic (ILLC, Amsterdam)

- Annika Schuster (University of Düsseldorf)

- Can Konuk (IJN)

- John MacFarlane (UC Berkeley)

- Salvador Mascarenhas (IJN)

- Sven Neth (UC Berkeley)

- Christina Pawlowitsch (Université Paris 2)

- Jakob Süskind (IJN)

- Benjamin Spector (IJN)

- Seth Yalcin (UC Berkeley)

Full Program

June 28, Salle Dussane

- 9:00-10:00 - Mathias Boehm - Updating Context Probabilism

Coffee break

- 10:20-11:20 - Milica Denic - Probabilistic informativeness of alternatives in implicature computation

- 11:30-12:30 - Annika Schuster - Probability and Typicality

Lunch break

- 14:00-15:00 - Christina Pawlowitsch - Meaning in Costly-Signaling Games

- 15:00-16:00 - Benjamin Spector - Exhaustivity, Anti-exhaustivity and the effect of prior beliefs in probabilistic models of pragmatics

Coffee break

- 16:30-17:30 - John MacFarlane - Do Vague Utterances Communicate Probabilistic Information?

19:30 - Social dinner

June 29, salle du Pavillon Jardin, IJN

- 10:00-11:00 - Sven Neth - Almost Certain Modus Ponens

Coffee break

- 11:20-12:20 - Salvador Mascarenhas - Question-answer dynamics and confirmation theory in reasoning without language

Lunch break

- 14:00-15:00 - Can Konuk - Confirmation Theory and Causality

- 15:00-16:00 - Jakob Süskind - Looking back at the Popper-Miller Paradox

Coffee break

- 16:30-17:30 - Seth Yalcin - Iffy Knowledge and Probabilistic Knowledge

Abstracts

Mathias Boehm

"Updating Context Probabilism"

In this paper I wish to investigate assertions of sentences of the form ‘the probability of φ is x’, such as

(1) The probability of rain in Paris tomorrow is .2.

In particular, I will focus on how best to model the dynamics of such sentences, that is, how best to model what assertions of such sentences contribute to the context of a conversation. I will take recent views on probabilistic vocabulary, which I will collectively refer to as context probabilism, as a starting point (see Moss 2018; Yalcin 2012). While context probabilism gives rise to a plausible semantics for sentences such as (1) and (paired with an appropriate notion of consequence) explains a range of desirable entailment data, we will see that it could do better at modelling the dynamics of such sentences. I will then argue that we should update context probabilism with an unorthodox (but conservative) view about assertion. According to this view, asserting a sentence is (roughly) a proposal to change the context, in a minimal way, to one that supports the asserted sentence (where a context supports a sentence just in case it contains enough information to settle whether the sentence holds). With this proposal on the table, I will show that updating context probabilism in this way preserves its benefits while providing a more desirable account of the dynamics of sentences containing epistemic vocabulary, including, but not restricted to, probabilistic expressions.

Milica Denic

"Probabilistic informativeness of alternatives in implicature computation"

How do logical and probabilistic reasoning interact with language interpretation? I will discuss this question in the context of a case study on scalar and ignorance implicatures. All models of these inferences rely on the existence of a set of alternative utterances that the speaker could have produced instead of the original assertion. The central question in modeling these inferences is thus: what counts as an alternative for a given sentence? I will argue that probabilistic informativeness of alternatives plays a central role in determining which alternatives feed implicature computation, but that the computation of probabilities needs to be blind to some aspects of contextual knowledge.

Annika Schuster

"Probability and Typicality"

In my talk, I will present a rational reconstruction of prototype concepts: probabilistic prototype frames (PPFs). As its name suggests, this model has three components: 1) the frame theory of concept representation, 2) the prototype theory of conceptual content, and 3) probabilities. Frames offer a flexible and fine-grained way to represent conceptual content and are therefore the representation format of choice. The prototype theory of concepts stresses the importance of typical properties for categorisation, which explains why some subcategories are perceived to be more typical of a category than others. For example, apples are more typical of the category fruit than pumpkins, because they have more of the typical properties of the fruit category: e.g., they taste sweet and are small, while pumpkins taste earthy and are big. In addition, some property dimensions seem to contribute more to typicality than others: a fruit that is big and tastes sweet, like a watermelon, is perceived to be more typical than a fruit that is small and tastes savoury, like an olive; the TASTE dimension seems to contribute more to typicality than the SIZE dimension. The difference in typicality contribution is accounted for by quantifying the properties represented in prototype frames with subjective probability estimations. This idea comes from Gerhard Schurz’s (2001, 2005, 2012) evolution-theoretic account of normality. He argues that for all categories that refer to entities shaped by evolution, there is a systematic connection between normality statements (“As are normally Bs” or “As typically have property B”) and statistical majority: they imply that the conditional probability of B given A, pr(B|A), is high.

I will present the theory behind PPFs and empirical data we collected to assess their cognitive plausibility and, more generally, the relationship between typicality and probability. Then, I will compare PPFs with other influential parametric prototype accounts: the family resemblance score (Rosch & Mervis 1975) and the contrast model (Tversky 1977, Smith et al. 1988).

Can Konuk

"Confirmation Theory and Causality"

Confirmation Theory (Crupi et al. 2008; Tentori et al. 2013) is a descriptive paradigm that captures a broad family of known deviations from Bayesian rationality. Given a set of hypotheses and a body of evidence, reasoners tend to prefer the hypothesis whose probability is most increased by the evidence, rather than the one with the highest posterior probability given the evidence.
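As an illustration of the contrast just described, the sketch below scores two hypothetical hypotheses, H1 and H2, with invented priors and likelihoods. It uses the simple difference measure P(H | E) - P(H) as the confirmation score; this choice is an assumption made for illustration, since the confirmation-theory literature cited above discusses several alternative measures.

```python
# Illustrative numbers only (hypothetical): they are chosen so that the
# two decision rules described in the abstract pick different hypotheses.

def posteriors(prior, likelihood):
    """Bayes' rule over a finite, mutually exclusive and exhaustive set of hypotheses."""
    p_evidence = sum(prior[h] * likelihood[h] for h in prior)  # P(E)
    return {h: prior[h] * likelihood[h] / p_evidence for h in prior}


def difference_confirmation(prior, posterior):
    """Difference measure d(H, E) = P(H | E) - P(H), one of several confirmation measures."""
    return {h: posterior[h] - prior[h] for h in prior}


prior = {"H1": 0.85, "H2": 0.15}     # P(H), hypothetical
likelihood = {"H1": 0.3, "H2": 0.8}  # P(E | H), hypothetical

post = posteriors(prior, likelihood)
conf = difference_confirmation(prior, post)

best_by_posterior = max(post, key=post.get)     # highest posterior probability
best_by_confirmation = max(conf, key=conf.get)  # largest increase in probability

print(best_by_posterior, best_by_confirmation)  # H1 H2
```

Here H1 keeps the higher posterior (about 0.68) even though its probability falls by about 0.17, while H2 rises from 0.15 to about 0.32, so a reasoner who tracks probability increase would favour H2.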

A pressing question is why humans engage in Confirmation behavior at all. One explanation is that subjects engage in question-answer dynamics and Relevance reasoning with the evidence (Sablé-Meyer and Mascarenhas, in preparation).

Here I want to advance a complementary explanation in terms of Causal reasoning. Humans track Confirmation relations between hypotheses and evidence because they treat hypotheses as Causal explanations for the evidence. Causal hypotheses do a better job of reducing uncertainty and surprisal upon new observations, as they allow one to predict new observations before one knows the full joint probability distribution over the relevant variables. The predictions that Causal hypotheses allow are of a Confirmatory nature: an event is known to be more probable in the presence of its cause, even before we know the conditional probability of the event given that cause. Hence, if subjects treat hypotheses as Causal explanations, we should expect them to engage in Confirmation strategies when evaluating evidence.

I present a few examples illustrating these points and a work-in-progress experimental design meant to test certain predictions of this account.

John MacFarlane

"Do Vague Utterances Communicate Probabilistic Information?"

It is tempting to suppose that an utterance of the vague sentence "Bill is tall" communicates probabilistic information about Bill’s height. It is not controversial that such an utterance can change a listener’s credences about Bill’s height. But if we are to say that this credal change is what is communicated by the utterance (what the speaker means and the listener apprehends), then we need an account of how the listener can recognize the intended change. Several authors have suggested that the RSA model, a Bayesian approach to pragmatics, can serve this purpose (see Lassiter and Goodman, "Adjectival vagueness in a Bayesian model of interpretation," 2017; Égré, Spector, Mortier, and Verheyen, "On the Optimality of Vagueness," ms. 2021). I will explain how the RSA account can be applied to vague adjectives, and then raise several problems for this approach. These problems are largely specific to vagueness and do not challenge other applications of RSA. After exploring some (ultimately unsuccessful) ways in which these problems might be solved within the RSA framework, I will sketch what I take to be the right account of the contents communicated by vague utterances and the probabilistic information they convey.

Salvador Mascarenhas

"Question-answer dynamics and confirmation theory in reasoning without language"

In a range of probabilistic reasoning tasks, humans seemingly fail to choose the option with the highest posterior probability (i.e., the highest chance of being true). For example, in their seminal 1973 article, Kahneman and Tversky presented human participants with descriptions of individuals and observed that participants judged the probability that those individuals belonged to one of two professions (lawyers vs. engineers) while seemingly ignoring the prior probabilities of those two categories in the sample at hand. One family of theories holds that human reasoners in such decision tasks ask themselves to what extent a piece of information supports a particular conclusion (Crupi et al. 2008; Tentori et al. 2013). On this view, participants ignore prior probabilities in the lawyers-engineers paradigm because they are interested in the extent to which the description supports a lawyer or an engineer hypothesis, regardless of prior probabilities. This confirmation-theoretic view has been extremely successful at modeling human behavior in many probabilistic reasoning tasks, but a justification for it is still lacking. That is, however rational (Bayesian) confirmation-theoretic strategies might be as a means of assessing the extent to which some evidence supports a hypothesis, it is an open question _why_ humans would engage in such a process, when, at least at first blush, the most rational strategy in a decision task of this kind is to identify the option with the highest posterior probability, which entails taking prior probabilities into account.

In this talk, I propose that confirmation-theoretic behavior is a result of question-answer dynamics, which are pervasive in human reasoning (Koralus & Mascarenhas 2013, 2018; Sablé-Meyer & Mascarenhas 2021) and are independent of narrowly linguistic cues. Returning to the lawyers-engineers example, I argue that participants treat the description they are given as a hint at an answer, as if uttered by a cooperative and well-informed speaker, meant to help them determine which of the two alternatives is most likely to be true. In this framing of the task, confirmation-theoretic behavior is the result of relevance-based reasoning, as has been formalized in various ways within the literature on linguistic pragmatics. Additionally, I show that question-answer dynamics of this kind are operative in deductive reasoning as well, and that they do not wholly depend on language. I present results from two experimental paradigms, one on deductive reasoning (Chung et al., to be presented at CogSci 2022; Martín et al., ongoing) and the other on probabilistic reasoning (Sablé-Meyer et al., under review), where all of the crucial information is conveyed pictorially. While these visual paradigms reduce the rate of surprising responses, significant portions of participants still deployed the question-answer reasoning strategy of interest, despite the minimal linguistic cues. The resulting view unifies a wealth of data on probabilistic reasoning with recalcitrant puzzles from deductive reasoning, under a theory that recruits mathematical tools from formal semantics and pragmatics and applies them to linguistic and non-linguistic reasoning. My presentation concludes with exploratory remarks on a key open question in this research program: are the question-answer dynamics at hand the result of bona fide online pragmatic reasoning, or are they indicative of an even deeper role for question semantics in human cognition, absent concrete pragmatic pressures?

Sven Neth

"(Almost) Certain Modus Ponens"

Consider the following inference: "It is maximally probable that P; if P, then Q; therefore, it is maximally probable that Q." Despite appearances to the contrary, I argue that this inference is invalid, with interesting consequences for the semantics of ‘certain’ and for ascriptions of belief and knowledge.

Christina Pawlowitsch

"Meaning in Costly-Signaling Games"

Costly signaling has been advanced as an explanation for a remarkably wide range of phenomena, from educational credentials as a signal of productivity in the job market (Spence 1973), through the evolution of “handicaps” (Zahavi 1975; Grafen 1990), to politeness in language (van Rooy 2003). Game theory provides a coherent modeling framework by explicitly formalizing the interaction as a game with incomplete information. The semantic properties of equilibria in costly-signaling games have so far been neglected, even though they bear on many of the debates about costly signaling (for instance, whether a costly signal always perfectly reveals the “good” type or whether there can be “cheating”). Indeed, what makes costly-signaling games an interesting modeling tool from a semantic point of view is that the meaning of a signal is not given ex ante (as an assumption of the model) but arises endogenously, in equilibrium, as a function of the parameters of the model: notably, the costs of the signal for different types, the benefits of success, and the prior probability distribution over types. In this talk, I will investigate the properties of the equilibrium structure of costly-signaling games from this semantic point of view. I will do so by looking at a minimalistic model with two types (“high” and “low”), two signals (the presence and the absence of a costly signal), and two reactions to signals (“accept” or “do not accept”). I will illustrate the explanatory potential of this game-theoretic modeling framework by discussing two applications: politeness in language and style-shifting.

Jakob Süskind

"Looking back at the Popper-Miller Paradox"

In a 1983 Nature article, Karl Popper and David Miller presented a paradoxical-looking result purporting to show that probabilistic confirmation is never inductive. Their main thesis does not seem to have convinced most contemporary authors. Nonetheless, the discussions surrounding it have raised interesting new questions about the notion of “parthood” among propositions. What does it even mean for one proposition or claim to be “part” of another? What does it mean for two propositions to have (or not to have) a “common part”? In looking back at the discussions surrounding the Popper-Miller paradox, I will try to show how a result in probability theory has given rise to questions about the meaning of parthood that are still open today and cannot comfortably be ignored by confirmation theory and related programmes.
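For readers unfamiliar with the result, the decomposition at the heart of the 1983 argument can be reconstructed as follows. The notation here is illustrative: s(h, e) is the difference-measure support that evidence e gives hypothesis h, and we assume 0 < p(e) < 1. Since h is logically equivalent to the conjunction of h ∨ e (which e deductively entails) and h ∨ ¬e (the part of h that goes beyond e), the support splits into two terms, and the second is never positive. Popper and Miller took that second term to be the only properly inductive component, hence their conclusion that probabilistic support is never inductive.

```latex
\begin{align*}
s(h, e) &:= p(h \mid e) - p(h), \qquad 0 < p(e) < 1,\\
h &\equiv (h \lor e) \land (h \lor \lnot e),\\
s(h \lor e, e) &= 1 - p(h \lor e) \;\ge\; 0,\\
s(h \lor \lnot e, e) &= p(h \mid e) - \bigl[p(h \mid e)\,p(e) + 1 - p(e)\bigr]
  = -\bigl(1 - p(h \mid e)\bigr)\bigl(1 - p(e)\bigr) \;\le\; 0,\\
s(h, e) &= s(h \lor e, e) + s(h \lor \lnot e, e).
\end{align*}
```

The third line uses p(h ∨ ¬e | e) = p(h | e) and p(h ∨ ¬e) = p(h ∧ e) + p(¬e); summing the two terms and expanding p(h ∨ e) = p(h) + p(e) - p(h ∧ e) recovers p(h | e) - p(h).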

Benjamin Spector

"Exhaustivity, Anti-exhaustivity and the effect of prior beliefs in probabilistic models of pragmatics"

Joint work with Alexandre Cremers and Ethan Wilcox

During communication, the interpretation of utterances is sensitive to a listener’s probabilistic prior beliefs, something which is captured by one currently influential model of pragmatics, the Rational Speech Act (RSA) framework. In this paper we focus on cases where this sensitivity to priors leads the framework to make counterintuitive predictions. Our domain of interest is exhaustivity effects, whereby a sentence such as ‘Mary came’ is understood to mean that only Mary came. We show that in the baseline RSA model, under certain conditions, anti-exhaustive readings are predicted (e.g., ‘Mary came’ would be used to convey that both Mary and Peter came). The specific question we ask is the following: should exhaustive interpretations be derived as purely pragmatic inferences (as in the classical Gricean view, endorsed in the baseline RSA model), or should they rather be generated by an encapsulated semantic mechanism (as argued in some of the recent formal literature)? To answer this question, we provide a detailed theoretical analysis of different RSA models (some of which include such an encapsulated semantic mechanism) and evaluate these models against data obtained in a new study which tested the effects of prior beliefs on both production and comprehension, improving on previous empirical work. We found no anti-exhaustivity effects, but observed that message choice is sensitive to priors, as predicted by the RSA framework overall. The best models turn out to be those which include an encapsulated exhaustivity mechanism (in line with other studies which reached the same conclusion on the basis of very different data). We conclude that, on the one hand, in the division of labor between semantics and pragmatics, semantics plays a larger role than is often thought, but, on the other hand, the tradeoff between informativity and cost which characterizes all RSA models does play a central role for genuinely pragmatic effects.
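The baseline RSA recursion discussed in this abstract can be made concrete with a toy model. The sketch below is a minimal illustration under stated assumptions (three hypothetical worlds, three messages, a rationality parameter `alpha`, and an optional hypothetical per-message `cost` dictionary). It follows the standard literal listener, pragmatic speaker, pragmatic listener chain, and it does not implement the encapsulated exhaustivity mechanism or the specific models evaluated in this work.

```python
import math

# Hypothetical toy domain (not the authors' experimental materials).
WORLDS = ["only_mary", "only_peter", "both"]
MESSAGES = {
    "Mary came": {"only_mary", "both"},
    "Peter came": {"only_peter", "both"},
    "Mary and Peter came": {"both"},
}


def normalize(scores):
    total = sum(scores.values())
    return {k: v / total for k, v in scores.items()}


def literal_listener(message, prior):
    """L0(w | u) ∝ [[u]](w) * P(w): the prior conditioned on literal truth."""
    return normalize({w: prior[w] if w in MESSAGES[message] else 0.0 for w in WORLDS})


def speaker(world, prior, alpha=1.0, cost=None):
    """S1(u | w) ∝ exp(alpha * (log L0(w | u) - cost(u))); false messages get 0."""
    cost = cost or {}
    scores = {}
    for u in MESSAGES:
        p = literal_listener(u, prior)[world]
        # exp(alpha * (log p - c)) == p**alpha * exp(-alpha * c); avoids log(0).
        scores[u] = p ** alpha * math.exp(-alpha * cost.get(u, 0.0)) if p > 0 else 0.0
    return normalize(scores)


def pragmatic_listener(message, prior, alpha=1.0, cost=None):
    """L1(w | u) ∝ S1(u | w) * P(w): Bayesian inversion of the speaker."""
    return normalize({w: speaker(w, prior, alpha, cost)[message] * prior[w] for w in WORLDS})


uniform = {w: 1 / 3 for w in WORLDS}
print(pragmatic_listener("Mary came", uniform))
# approximately {'only_mary': 0.8, 'only_peter': 0.0, 'both': 0.2}
```

With a uniform prior and no message costs, this pragmatic listener assigns most of its probability to the exhaustive reading of ‘Mary came’. Changing the prior or the hypothetical `cost` dictionary (for example, making ‘Mary and Peter came’ more costly) is one way to probe how the baseline model's interpretation depends on prior beliefs, which is the kind of sensitivity the abstract examines.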

Seth Yalcin

"Iffy Knowledge and Probabilistic Knowledge"

I present some puzzles about ascriptions of conditional knowledge, that is, about things of the form ‘A knows that if p, then q’. I suggest that they motivate the idea that knowledge is in some sense graded. I will ask how to understand the relevant grading, and in particular how to situate it relative to the ideas about probabilistic knowledge developed by Moss and by Goldstein and Beddor.

Local organizing committee

Paul Egré, Can Konuk, Benjamin Spector, Jakob Süskind & Thomas Souverain